AI Audio & Video Translation and Dubbing Tool

LLM-based, professional-grade translation that can output both portrait and landscape videos, with one-click deployment.


🚀 Project Overview

Krillin AI is a one-stop solution for users and developers who need high-quality video processing. It covers the whole workflow end to end, from downloading a video to producing the finished product.

Key Features and Functions:

🎯 One-Click Start: No complicated environment setup is needed. Krillin AI installs its dependencies automatically, so you can get started and put it to use right away.

📥 Video Acquisition: Integrated with yt-dlp, it downloads videos directly from YouTube and Bilibili links, which simplifies collecting source material. You can also upload local videos.

📜 Subtitle Recognition and Translation: Supports speech and large language model services from mainstream providers such as OpenAI and Alibaba Cloud, as well as local models (support for more is being added).

🧠 Intelligent Subtitle Segmentation and Alignment: Uses in-house algorithms to segment and align subtitles intelligently, avoiding rigid sentence breaks.

🔄 Custom Vocabulary Replacement: Supports one-click vocabulary replacement to match the terminology and style of specific fields.

🌍 Professional Translation: Translates whole paragraphs at once, keeping the context consistent and the meaning coherent.

🎙️ Dubbing and Voice Cloning: Generate a dubbed voice-over for the translated content using the default male or female voice, or upload a local audio sample to clone a voice for dubbing.

📝 Dubbing Alignment: Performs cross-language dubbing while keeping the dubbed audio aligned with the original subtitles.

🎬 Video Composition: Compose landscape and portrait videos with embedded subtitles in one click. Subtitles that exceed the width limit are handled automatically.

Language Support

Input languages: Chinese, English, Japanese, German, and Turkish (more languages being added)

Translation languages: 56 languages supported, including English, Chinese, Russian, Spanish, French, etc.

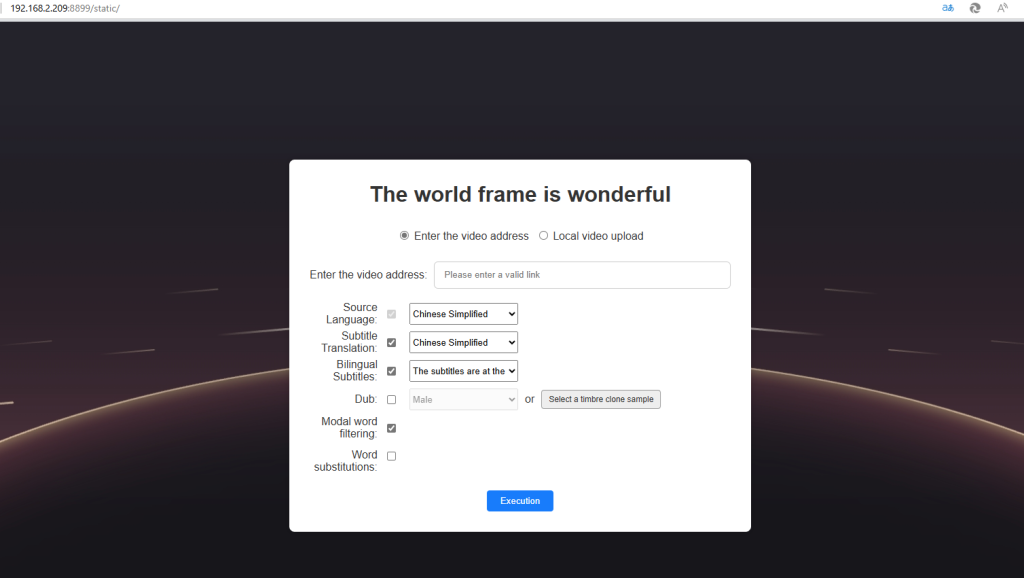

Interface Preview

Quick Start

Basic Steps

- Download the executable that matches your operating system from the Releases page and place it in an empty folder.

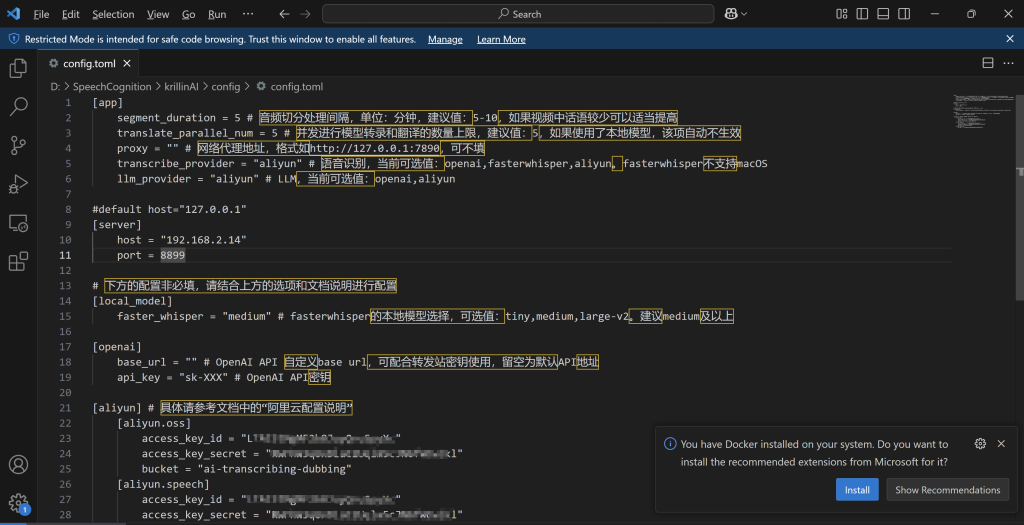

- Create a `config` folder inside that folder, then create a `config.toml` file in the `config` folder. Copy the contents of `config-example.toml` from the `config` directory of the source code into `config.toml` and fill in your own settings.

- Double-click the executable to start the service.

- Open a browser and go to http://127.0.0.1:8888 to start using it (replace 8888 with the port you configured in `config.toml`).
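On macOS or Linux, the config-folder step above can be scripted instead of done by hand. This is a minimal sketch, not something shipped with Krillin AI: `prepare_config` is a hypothetical helper, and it assumes you run it from the folder that holds the executable and pass it the path to a local copy of the source code.

```shell
# prepare_config SRC_DIR  (hypothetical helper, not part of Krillin AI)
# Creates ./config and copies SRC_DIR/config/config-example.toml to
# ./config/config.toml, unless config.toml already exists.
prepare_config() {
  src_dir=$1
  example="$src_dir/config/config-example.toml"

  # Fail early if the example config cannot be found.
  if [ ! -f "$example" ]; then
    echo "example config not found: $example" >&2
    return 1
  fi

  mkdir -p config

  # Never overwrite a config.toml you have already edited.
  if [ -f config/config.toml ]; then
    echo "config/config.toml already exists; leaving it unchanged."
  else
    cp "$example" config/config.toml
    echo "Created config/config.toml; fill in your settings before starting."
  fi
}
```

For example, `prepare_config ~/src/KrillinAI` (an example path) creates `config/config.toml`, which you then edit before double-clicking the executable.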

For macOS Users

This software is not signed, so after completing the file configuration in the “Basic Steps,” you will need to manually trust the application on macOS. Follow these steps:

- Open a terminal and navigate to the directory containing the executable (assumed here to be named KrillinAI_1.0.0_macOS_arm64).

- Run the following commands in order:

sudo xattr -rd com.apple.quarantine ./KrillinAI_1.0.0_macOS_arm64

sudo chmod +x ./KrillinAI_1.0.0_macOS_arm64

./KrillinAI_1.0.0_macOS_arm64

The last command starts the service.

Docker Deployment

This project supports Docker deployment. Please refer to the Docker Deployment Instructions.

Cookie Configuration Instructions

If you encounter video download failures, please refer to the Cookie Configuration Instructions to configure your cookie information.

Configuration Help

The quickest and most convenient configuration method:

- Set both `transcription_provider` and `llm_provider` to `openai`. Of the three main configuration sections (`openai`, `local_model`, and `aliyun`), you then only need to fill in `openai.apikey` to start translating subtitles. Fill in `app.proxy`, `model`, and `openai.base_url` according to your own setup.

To use the local speech recognition model (not yet supported on macOS), an option that balances cost, speed, and quality:

- Set `transcription_provider` to `fasterwhisper` and `llm_provider` to `openai`. Of the main configuration sections, you then only need to fill in `openai.apikey` and `local_model.faster_whisper` to start translating subtitles. The local model is downloaded automatically. (`app.proxy` and `openai.base_url` work the same way as above.)

The following scenarios require Alibaba Cloud configuration:

- If `llm_provider` is set to `aliyun`, Alibaba Cloud's large language model service is used, so the `aliyun.bailian` item must be configured.

- If `transcription_provider` is set to `aliyun`, or if “voice dubbing” is enabled when starting a task, Alibaba Cloud's speech service is used, so the `aliyun.speech` item must be configured.

- If “voice dubbing” is enabled and you also upload local audio files for voice cloning, Alibaba Cloud's OSS cloud storage service is used as well, so the `aliyun.oss` item must be configured.

Configuration guide: Alibaba Cloud Configuration Instructions
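The configuration rules in this section can be summarized as a small lookup. The shell function below is an illustrative sketch, not part of Krillin AI: `required_sections` and its yes/no flags are hypothetical, and the assumption that `openai.apikey` is needed whenever either provider is `openai` is inferred from the two setups described above rather than stated by the project.

```shell
# required_sections TRANSCRIPTION_PROVIDER LLM_PROVIDER DUBBING VOICE_CLONE
# Hypothetical helper: prints, one per line, the config items this README
# says must be filled in. DUBBING and VOICE_CLONE are "yes" or "no".
required_sections() {
  transcription=$1 llm=$2 dubbing=$3 clone=$4

  # Assumption: the OpenAI key is needed whenever OpenAI is a provider.
  if [ "$transcription" = openai ] || [ "$llm" = openai ]; then
    echo openai.apikey
  fi
  # Local speech recognition needs the faster-whisper model setting.
  if [ "$transcription" = fasterwhisper ]; then
    echo local_model.faster_whisper
  fi
  # Alibaba Cloud LLM service.
  if [ "$llm" = aliyun ]; then
    echo aliyun.bailian
  fi
  # Alibaba Cloud speech: aliyun transcription or voice dubbing.
  if [ "$transcription" = aliyun ] || [ "$dubbing" = yes ]; then
    echo aliyun.speech
  fi
  # Voice cloning from uploaded audio additionally uses OSS storage.
  if [ "$dubbing" = yes ] && [ "$clone" = yes ]; then
    echo aliyun.oss
  fi
}
```

For example, `required_sections fasterwhisper openai yes no` prints `openai.apikey`, `local_model.faster_whisper`, and `aliyun.speech`, one per line.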

Frequently Asked Questions

Please refer to Frequently Asked Questions